I’ve been writing about various AGI scenarios in the Road to AGI series, considering everything from AGI being imminent, to being delayed by decades, or even being impossible. To me, in early 2025, it’s clear AGI is imminent. The evidence seems overwhelming to me, at least.

So naturally, we should start thinking about what this transition looks like from where we find ourselves today (April 2025). If you recall, there were rumors of LLMs hitting a wall through 2024. Some were expecting an imminent AI winter.

Those rumors were already put to bed with Grok-3, Gemini 2.0, and DeepSeek R1 early in 2025. Since then, all the main players have put their latest cards on the table with Anthropic’s Claude 3.7, Google’s impressive Gemini 2.5, and OpenAI’s recent model rampage with GPT-4.5, GPT-4.1, o4-mini, and the long-awaited o3.

So we start this timeline from a point at which we have an abundance of AI, and the race to AGI is intensifying. Every AI lab wants to show they are winning, or at least racing at the frontier. This is why we have benchmarks.

2025: Moving Beyond Benchmarks

Given this is the current phase we’re living through, I thought it made sense to explore this topic in much greater detail than a single section of this post would warrant. You can read the full thing in Benchmarking our way to AGI.

Saturation point

One of the main issues with benchmarks is that we keep running out of them. The go-to measure of raw intelligence has been Massive Multitask Language Understanding (“MMLU”), which is made up of multiple-choice questions across many academic domains. Like most benchmarks, initially, this seemed a herculean task for Large Language Models.

Over the past two years, models have gotten so good at MMLU that a more difficult version, MMLU Pro, was necessary. If models are all hitting close to 100%, you need harder questions to ask. Otherwise, you can’t demonstrate that the new model is in fact better and worth the continued investment.

But can this go on forever? Can we just add more money, more compute, more data, more training, and just keep on getting better AI every time? Some say no, that we’re already hitting a wall.

Breaking the wall

One of the rumors in 2024 was the idea that pre-training was hitting a wall. Even Ilya said it. That the only way forward was test-time compute, which is basically what reasoning models do. In early 2024, a lot of weight was put into benchmarks for reasoning models. For a good while, many thought we might never crack ARC-AGI, at least not with LLMs. Then came OpenAI’s o3, and well, that’s a wrap. The reasoning models starting with the original o1 have given us a new scaling law: test-time compute.

So it’s clear that even if we’ve scoured the internet clean, and base models can’t improve meaningfully, we can just let them think longer about each response, and keep getting better results. Then you can use the thinking model to generate better data for the next base model. So the scaling goes on.
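To get a feel for why spending more compute per answer can help, here is a toy sketch. It does not model how reasoning models like o1 or o3 actually work (they think longer within a single chain of thought). Instead it swaps in a much simpler form of test-time compute: sample several independent answers and take a majority vote. The independence assumption, the binary right/wrong framing, and the function name `majority_vote_accuracy` are all illustrative assumptions, not anything taken from a lab’s published method.

```python
from math import comb


def majority_vote_accuracy(p_single: float, samples: int) -> float:
    """Probability that a strict majority of `samples` attempts is correct.

    Toy assumptions: every attempt is independently correct with
    probability `p_single`, and each answer is simply right or wrong.
    """
    if samples % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    needed = samples // 2 + 1  # smallest count that forms a strict majority
    return sum(
        comb(samples, k) * p_single**k * (1 - p_single) ** (samples - k)
        for k in range(needed, samples + 1)
    )


# Hypothetical model that gets a question right 60% of the time per attempt.
for n in (1, 3, 5, 11, 31):
    print(f"{n:>2} samples -> {majority_vote_accuracy(0.6, n):.3f}")
```

In this toy setting, accuracy climbs as you pay for more samples, as long as a single attempt is right more often than not. Real systems are messier, since attempts are correlated and answers are rarely binary.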

The final benchmark(s)

I didn’t see people shouting we have AGI after o3 beat ARC-AGI. It turned out to be just another benchmark. Meanwhile, we can’t even measure AI's impact on GDP yet. So, something is still missing, but what is that special something?

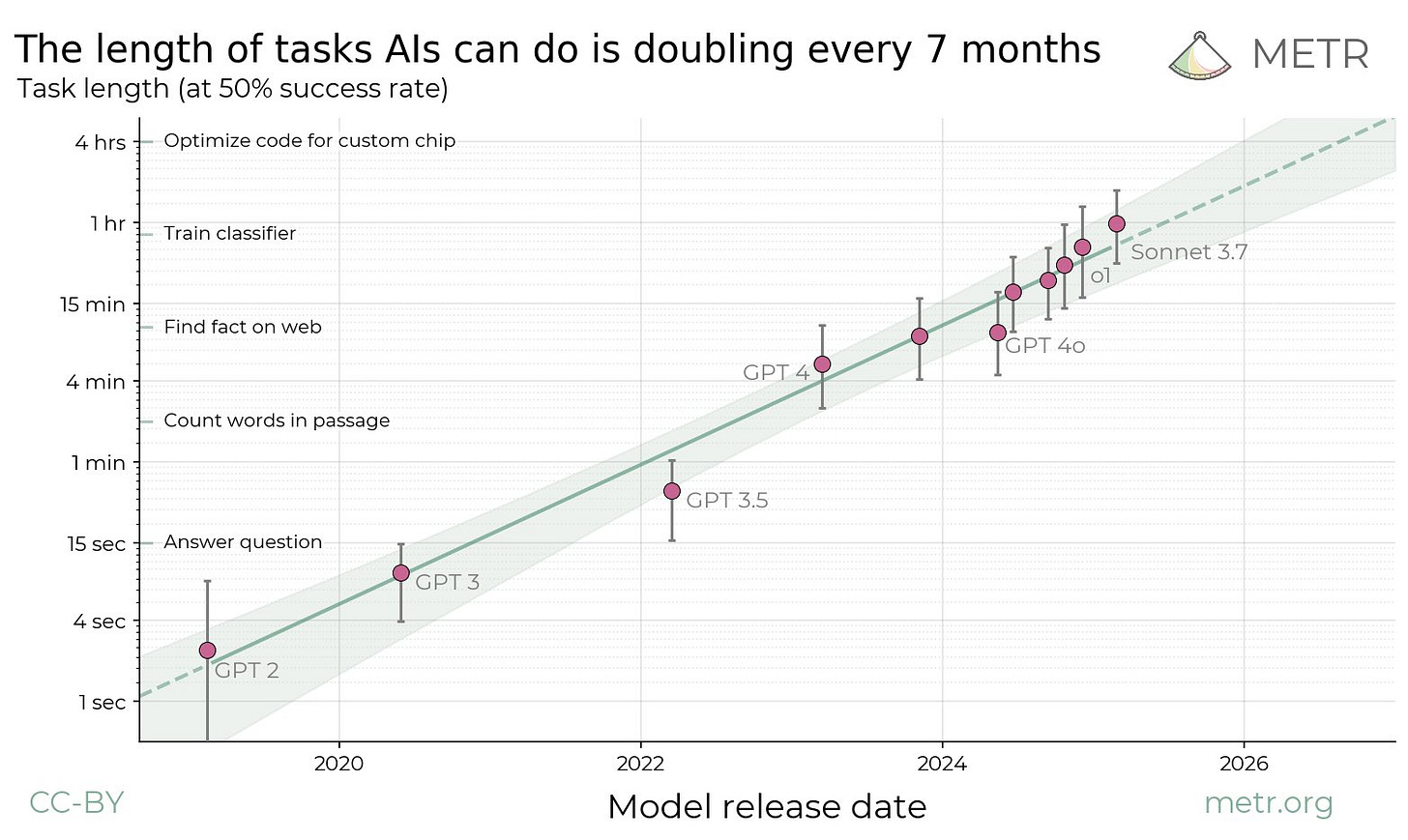

So far in 2025, the biggest update in terms of AI progress came from METR (Model Evaluation & Threat Research). In their recent paper titled Measuring AI Ability to Complete Long Tasks, they give us a new exponential curve, yet another scaling law, and potentially even a new Moore’s Law for agentic AI.

Here’s the chart that is turning heads among AI insiders.

Notice, very importantly, that this is not actually a straight line. Well, it is, but the y-axis is a logarithmic scale. Plotted on a linear scale, this curve would look very much exponential.

According to this data, we should expect a doubling along this trajectory every 7 months.
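To make the doubling concrete, here is a minimal sketch of the arithmetic. The 7-month doubling time comes from the text above. The starting horizon of one hour is a placeholder assumption chosen for round numbers, not a figure taken from the METR paper, and the function names are made up for illustration.

```python
import math

DOUBLING_MONTHS = 7.0  # doubling time quoted in the text


def projected_horizon_hours(start_hours: float, months_ahead: float,
                            doubling_months: float = DOUBLING_MONTHS) -> float:
    """Task length (in hours) after `months_ahead`, under steady doubling."""
    return start_hours * 2 ** (months_ahead / doubling_months)


def months_until(target_hours: float, start_hours: float,
                 doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months needed for the horizon to grow from start_hours to target_hours."""
    return doubling_months * math.log2(target_hours / start_hours)


START_HOURS = 1.0  # hypothetical starting point, for illustration only

for months in (0, 7, 14, 21, 28):
    hours = projected_horizon_hours(START_HOURS, months)
    # log2(hours) grows by exactly 1 per doubling period, which is why
    # the curve looks like a straight line on a logarithmic y-axis.
    print(f"+{months:>2} months: {hours:5.1f} h  (log2 = {math.log2(hours):.1f})")

print(f"Months to reach an 8-hour task: {months_until(8, START_HOURS):.0f}")
```

Under these assumptions, three doublings (21 months) turn a one-hour task into a full working day. A different starting point shifts the dates, but not the shape of the curve.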

Just pause there for a moment and think this through. During 2025, we will already see AI completing hours worth of human effort in one go. That means not only economically valuable tasks but probably entire jobs.

AGI has entered the chat.

2025-2026: Entering the Event Horizon

If you stop and think about what we’ve just established, it’s pretty jarring. Could it be that agents are the final paradigm that takes us to AGI and beyond?

On a personal level, the most impressive AI experiences I’ve had are with coding agents like Claude Code. It just handles so much structure and complexity with relative ease and incredible speed. Sure, not every line of code generated will be exactly what you wanted, or what’s best, but you can spend a few hours generating a whole sprint’s worth of functionality.

That’s quite something to see happening live, as Claude Code whizzes through reading code, documents, managing task lists, and then begins a sudden flurry of thousands of tokens being counted in front of your eyes, creating new files and code edits in a frenzy of raw intellectual output.

As a software developer, what more do you need from AGI?

Coders are the canaries in the proverbial coal mine. We should expect Anthropic to launch their “virtual worker” (previously “collaborator”) soon, along with domain-specific agents from OpenAI. Followed by every AI lab starting to fill every niche available in the wider economy.

However, nothing is clear in the twilight zone of intelligence.

Is my AGI your AGI?

First, everyone has a different definition of AGI.

In a recent interview on the Dwarkesh Podcast, Microsoft CEO Satya Nadella gave us a more objective benchmark for AGI: economic growth. Economists keep saying AI and AGI will change the labor market, so let’s wait for that 10% growth and then talk.

Then again, I see no reason why we couldn’t first see widespread AI replacement of jobs, keeping GDP growth relatively flat, as higher productivity is offset with decreased consumption. That would certainly qualify as AGI to me, as bleak as it sounds.

My conclusion is that we have already entered the event horizon of AGI, and there is no going back. As accelerationist philosopher Nick Land would say, “what appears to humanity as the history of capitalism is an invasion from the future by an artificial intelligent space that must assemble itself entirely from its enemy’s resources.” The gravity of AGI will pull us into the singularity, kicking and screaming if need be.

Agents will hold our hands into the singularity

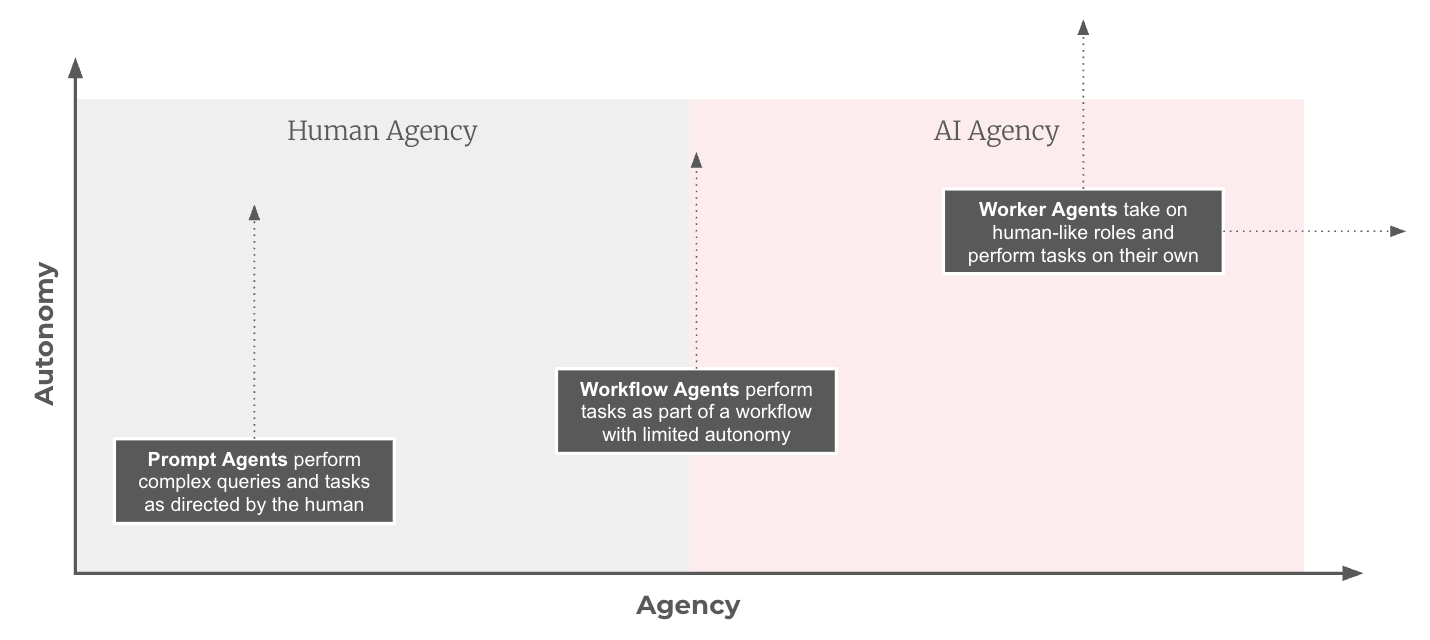

Here’s a framework I created to distinguish the various types of AI agents we’ll be interacting with. We’re all familiar with Prompt Agents starting from ChatGPT and its extensions like Search and Deep Research. But there is a gradual disempowerment as we move further away from human agency and into the realm of artificial agency.

I would keep an eye on quarterly earnings calls in 2025, and I would bet the buzzword bingo word of the year is “agents”. What I term Workflow Agents is the perfect solution to simplifying complicated processes that generate relatively little value. Corporate CFOs cannot resist the opportunity, as the payback period for such automation is nearly instantaneous. You invest a little bit of time and money to simultaneously get rid of expensive SaaS subscriptions and entire teams of employees whose only job is to click around on those bloated websites all day.

Like we’ve seen happen many times before, initially, the AI underperforms the human at a benchmark or given task. Then, there’s a boundary where a team of human + AI is best, but the inevitable conclusion is that at some point, the human simply introduces error into the process and can no longer be justified.

Further, as we introduce these Worker Agents into the economy, we will see what the limits of artificial agency really are. Perhaps they will go much farther than we can currently imagine.

Between 2025 and 2027, we will see AI play an increasingly large role in the economy. We will absolutely see job replacement by AI. Meta already announced they would hire fewer software engineers going forward. Klarna is reportedly never hiring a human again. I’ve personally heard founders saying similar things. We will do more with less, as has always been the capitalist mantra.

While I do foresee some form of white collar implosion ahead, I believe the damage will be limited, at least initially, to the extinction of a few job categories, including customer support and generic administrative work. As Satya himself says, knowledge work will change, but knowledge workers will adapt.

So there will be new jobs, of some form. These cuts will also make the issue political. Unemployment is never a winning strategy, as Trump and Elon are already finding out with DOGE.

So as the economic impact of AI scales up in the next few years, who wins? Are we destined to serve under Emperor of Efficiency, Elon The Great?

It’s NOT Winner Takes All

Thankfully, I believe not. For the longest time, I’ve subscribed to the winner-takes-all scenario. It’s pretty scary for many reasons, and it obviously creates a race dynamic where you have to win at all costs, safety be damned.

At this point, the race dynamic is absolutely happening. Never more so, in fact. Elon is racing against Sam, and America is racing against China. So, how does this turn out differently?

How this dynamic plays out hinges on one key assumption: escape velocity. If you believe the AI lab CEOs like Sam and Dario, then you must raise billions now, and tens of billions soon. Because those who can will soon escape beyond the competitive horizon. Their AI models will be so much better that catching up is simply impossible.

This could turn out to be true, but in my view, the evidence is stacking against this model. Three things have changed my mind on this matter recently.

Open-source: I think the DeepSeek hype is largely overblown, and actually DeepSeek is just Mistral but in China. Everyone said all the same things about Mistral in 2023: open-source, small, cracked teams, the downfall of OpenAI, etc. The real signal here is that open-source still matters. First, DeepSeek R1 showed that open-source doesn’t necessarily have to be that far behind frontier models, despite the lack of published research by closed labs like OpenAI. Second, we saw how popular DeepSeek became, not just in AI circles but with the public. Sam Altman won’t have that and has hinted at upcoming open-source releases. Their Codex coding client is a start. It’s much harder to imagine a singular winner if open-source is a big part of the market for consumers and enterprises.

B2B: Satya Nadella, perhaps biased in his own ways, made a very compelling case for why the markets would never accept one winner. While it certainly seems like OpenAI has reached a kind of Googlesque escape velocity with ChatGPT on the consumer side, the enterprise landscape looks very different. In fact, Anthropic supposedly has more enterprise revenue than OpenAI. The B2B landscape has so much room for everyone: big general models, agents, specialized models, small models, fast models, open-source models, etc… The pie is almost infinite, and there is room for all in the model zoo!

Sovereign AI: Put these two things together with the geopolitical instability we’ve seen increasingly play out in the news, and there is ample reason for seeking independence. Consumers may be happy to stick with ChatGPT, but companies, industry clusters, cities, nations, and alliances will want to own more of the value chain. Why do we allow private companies with private investors to rip off our data and sell it back to us? The entire value chain, from data and hardware to training, models, and applications, could be shared. One such vision is Emad Mostaque’s Intelligent Internet, where he sees “a new paradigm for AI that democratizes access, fosters collaboration, and aligns technology with human values.” That sounds great to me.

At the end of the day, everyone here is serving their own interests, but that also has the self-reinforcing effect that all opinions will win. Diversity of incentives creates diversity of outcomes.

So at this juncture, we accept that we have AGI, in many forms and from many winners. How does the world change tomorrow?

2027-2030: The World of Tomorrow

As we enter the Age of AGI, I don’t actually see a Thanos-like snap of the fingers that changes everything in an instant. The world is messy. So is the economy.

If you invented AGI today, you could obviously make a lot of money very quickly. But the human world is very human. Things like adopting new technologies, board meetings, quarterly budgets, and decision-making in general take time. Even if it were 100% clear that every business should fire all their staff today, it would not happen today. Beyond inefficiencies, we also have laws and regulations.

I realize regulation and safety are not in vogue right now, but their time will come. Unemployment is uncool. So if AI goes too quickly, the politicians will have to rein it in, as epic as it sounds to say the word “accelerate” in press conferences.

Technically, most economically valuable tasks can be automated end-to-end at this point. Let’s call it 2027. That doesn’t mean that they are, in fact, immediately automated. That long-tail could take years, but it’s only a matter of time at this point. There is no “if” to be asked any longer, just “when”.

Quite quickly, the world will start to feel different. We will find out what those new jobs are like, and whether we enjoy doing them. But there’s no putting the genie back in the bottle. We will find out what AI-first feels like and what AI-native products and services can really do. Some of it will be wild, some of it even scary.

With each new generation of algorithms and hardware, the tokens per watt per dollar equation keeps the scaling laws alive. Gradually, Sam Altman’s vision for “intelligence too cheap to meter” will become a reality, and AI will seep into every pore, nook, and cranny of the economy by 2030.

So, what then? What do we do with AI besides killing SaaS?

Atoms to bits and back again

I believe we all share some key elements of what a bright future looks like. Really, it’s the future of our childhood, from the stories we read and the movies we watched. The problems we want to solve are the problems we’ve lived with for several generations now.

We want the cure for cancer,

we want a base on the moon,

clean energy too cheap to meter,

and maybe flying cars.

This is the future that Peter Thiel and Elon Musk have been talking about. If you squint your eyes and look outside, it would be hard to separate the world of 2025 from the world of 1970. We have trains, planes, and automobiles. Different shapes and some different fuels.

We had the space shuttle and big plans by NASA for space colonization in 1976, but then the oil crisis hit. Read The High Frontier by Gerard O’Neill, where he talks about exploring the solar system and building floating human colonies in space.

While this could happen without AI, the relevant question is what impact will AI have on scientific progress? While I don’t expect the next Nobel prize winners list to be saturated by names of AI models, I find it hard to believe that frontier science will continue on pencil and paper. If we look at software development as a prototype, then we should expect scientists to reshape their routines around AI to maximize output. Not just quantity, but also quality. Demis Hassabis won his Nobel for AI, but the next ones will be won with AI.

Progress in science is also progress in AI. I can only imagine the degree to which researchers at OpenAI are making use of models that only they have direct access to. Surely, the contributions of AI models to each generation of new models are a benchmark of their own.

Yet AI, even in AGI form, still only touches bits directly. To change our physical environment, we will need robots. From recent progress, it seems we won’t be waiting very long.

When talking about these ideas, a friend of mine asked an interesting question. How does any of this change the life of a farmer in Bangladesh by 2040? As Paul Graham noted on Twitter recently, echoing a line usually attributed to William Gibson, “the future is unevenly distributed”.

One way to answer this is to imagine 100 years of progress in the next decade. This is what the founder of the EA movement and noted moral philosopher Will MacAskill has been working on since his fateful liaison with SBF.

This isn’t just progress in science. It’s everything. Just look back and imagine all the progress since 1925 up to 2025, but happening by 1935. Computers, the internet, mobile phones, satellites, the space station, social media, communism, feminism, DEI, and so on. It’s technology, but it’s also ideas.

So, yes, I think even with Tyler Cowen’s reasoning for a slower AI takeoff, it seems such a tsunami of progress would seep through every pore and crack of the global economy by 2040. Yes, even the farmer in Bangladesh. Maybe he’s still farming, or maybe Bangladesh experiences rapid industrialization powered by self-assembling factories. Possibly as an independent nation, possibly as a vassal of the New Rome under Emperor Barron Trump. We’ll see.

There is one big problem with all this progress, though.

Does the future stop where we want, or does the explosion continue beyond what we can imagine or even process?

2030+: The Great Intelligence Plateau

There is one huge, existentially ginormous assumption behind everything I’ve just said. I no longer believe that recursive self-improvement (RSI) is automatic.

A new research org called Forethought, led by the aforementioned Will MacAskill, came out with a paper that describes the mechanisms and dynamics of an intelligence explosion from automated AI research.

I’m saying this will not happen. Not because it cannot happen. Because I choose not to believe it will. Let me explain.

If I’m wrong, and we quickly “foom” past AGI into Artificial Superintelligence (ASI), and humanity is sucked into the dark chaos of the singularity, then all bets are off.

Arguments against foom

But there are reasons not to believe this. The first is anthropic reasoning. No, not talking about Claude here.

If you believe the singularity is nigh, then nothing is worth planning anyway. It’s a black hole by definition, completely beyond human comprehension and control. So it’s also self-defeating. If it happens, it happens. Nothing you do will change that.

But there’s a more compelling argument, too. Economist and long-time AI commentator Robin Hanson has been arguing, before it was cool, that we need not fear AI. On the internet of yesteryear, in 2008, Robin Hanson challenged Eliezer Yudkowsky to a series of lengthy online debates across several essays and their comments.

As fast as this current era of AI progress has happened, Robin points to many decades of research to produce singular capabilities. He recalls, since he was there, how at each new revolution, many researchers claimed a specific capability to unlock everything else. Expert systems and symbolic reasoning were meant to do that, but didn’t. Before transformers, similar things happened with neural networks, deep neural networks, and reinforcement learning.

I personally remember seeing AlphaGo in 2016, thinking this was the final piece. This is how I got into AI, but things didn’t exactly go linearly from there either.

As we’ve seen with just OpenAI, the sheer scale and complexity increases since GPT-2 seem to indicate that the goal of automating all this work also becomes much harder with each generation, keeping that dream out of reach. It may also be economically prohibitive, as OpenAI fatefully set itself on the iterative research agenda. The markets expect constant outputs of new models and cheaper APIs.

Similar arguments have been put forth by Ege Erdil of Epoch AI on the Dwarkesh Podcast. The Industrial Revolution wasn’t just about horsepower. Horsepower alone doesn’t transform society and the economy. If intelligence were enough, you would expect models like OpenAI’s o3 to already be making novel insights into mathematics and science. But that doesn’t seem to be happening at all, despite all the incredible knowledge and ability that o3 packs.

Ege also presents some more technical arguments, in line with Robin Hanson’s reasoning, that there are still multiple paradigms ahead, such as coherence over long time horizons, agency and autonomy, full multimodal understanding, common sense, and real-world interaction. My take is that we’re probably pretty close with all of the above, but it’s also not impossible that the transformer architecture hits a wall just below the threshold of self-recursion, requiring several more decades of intense research to pass the final hurdle, if it exists.

If they’re wrong and we hit fast take-off from AGI to ASI, then there’s no point in planning anything at all, and it’s a true singularity moment for humanity.

“Even bad fortune is fickle.

Perhaps it will come, perhaps not;

in the meantime, it is not.

So look forward to better things.”

— Seneca

That scenario is by definition out of our control, so why worry about it? It makes sense to focus our energies in a more optimistic direction, where we maintain agency and relevance.

Implications for today

So what to make of all this today? For now, here are a few beliefs I currently hold on this basis.

Returns are not diminishing, they’re accelerating: With the introduction of Worker Agents as drop-in replacements for entry-level knowledge worker jobs, there are tens of billions in revenue potential up for grabs. This will accelerate investment even further.

We’re already on the exponential growth line: While GDP impact still awaits, we can look at tokens per second per dollar, or even IQ points per dollar. We can look at the length of tasks carried out by AI agents, which seems superexponential. Perhaps as AI starts doing more economically valuable work, we focus on dollars generated per token?

Do NOT bet on one winner: Not only is it a bad outcome, it’s a bad strategy. A thriving, rich ecosystem of AI builders, connectors, adapters, and tinkerers should be ahead with plenty of new human jobs (of some kind).

Being a laggard is dangerous: As an individual, it’s your responsibility to adopt AI in your life and work. If you don’t, expect to get left behind and face existential questions in 2026.

Be part of the solution: Now is not the time to put your head in the sand. This may well be the last opportunity to build & invest, and ride the AI wave. Don’t let time run out.

AI-first survive: For existing businesses, becoming AI-first is an urgent imperative for survival. Hire young people who don’t care about rules and risks, and start breaking things with AI. That’s the only future your business has. Business as usual is dead; move forward.

AI-native thrive: We will start to find out what AI-native actually looks like. Cloud-native gave us YouTube, Netflix, and Spotify. Mobile-native gave us Uber and Instagram. What wonders await us?

Prepare for the world of tomorrow: The biggest reason to be positive, upbeat, and excited for the future is that it’s finally happening. As a child of the 80s, I’ve waited my entire life for the future: moon bases, flying cars, and the cure for cancer. Bring it on!