In case you weren’t aware, the smartest people on the planet have raised record-setting war chests with a singular goal in mind: Artificial General Intelligence (AGI).

In the first two essays of this Road to AGI series, we’ve already established that AGI is not only possible but actually inevitable. The logical next question is therefore: AGI when?

Why timelines matter

Recently, in a “superhuman” 19 days, Elon Musk’s xAI built the largest single cluster of Nvidia H100 GPUs, located in Memphis, Tennessee. He’s now planning to double that by raising another $6 billion to pay Jensen Huang for another batch of his finest Taiwanese wafers. The race is well and truly on.

Current systems like GPT-4 were reportedly trained on tens of thousands of GPUs, so clusters like this add roughly another order of magnitude of compute. It should come as no surprise that Meta, OpenAI, Anthropic, and Google are all deploying six-figure GPU clusters, meaning 100,000 GPUs or more.
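The “order of magnitude” arithmetic is just the base-10 logarithm of the ratio between two cluster sizes. Here is a minimal Python sketch. The GPU counts are hypothetical round numbers chosen to match the rough phrasing above (“tens of thousands” versus “six-figure” clusters), not reported figures. GPU count is also only a proxy for compute, since per-chip speed and training duration matter too.

```python
import math


def orders_of_magnitude(baseline: float, target: float) -> float:
    """Powers of ten separating two positive quantities (log10 of their ratio)."""
    if baseline <= 0 or target <= 0:
        raise ValueError("both quantities must be positive")
    return math.log10(target / baseline)


# Hypothetical round numbers for illustration, not reported cluster sizes.
gpt4_era = 10_000        # low end of "tens of thousands"
six_figure = 100_000     # low end of "six-figure"
doubled = 2 * six_figure # doubling such a cluster

for label, gpus in [("six-figure cluster", six_figure), ("doubled cluster", doubled)]:
    print(f"{label}: {orders_of_magnitude(gpt4_era, gpus):.2f} orders of magnitude")
```

Note that doubling a cluster adds only about 0.3 of an order of magnitude, which is why each full step of ten is so expensive.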

How well does this scale-up in compute translate into better models? We’re about to find out. Each order of magnitude we go up costs more, takes longer to build and takes longer to train. The question becomes: how many orders of magnitude until AGI?

That’s the quadrillion-dollar question. So are we getting AGI in a few months, a few years, a few decades, or never?

Why should we care? What comes, comes? Well, if we think AGI is going to change the world as we know it, then we’ll need to prepare accordingly. Technology sure changes fast, but society and governance do not.

“AGI is no longer a distant speculation; it’s an impending reality that demands our immediate attention.”

Silicon Valley Takes AGI Seriously—Washington Should Too | TIME

Before we start dropping timelines all over the map, let’s make sure we’re talking about the same thing when we refer to AGI.

Defining the AGI goalpost

In the first post of this series, we defined AGI using the “Levels of AGI” paper by Google DeepMind.

Level 1: Emerging AGI (we are here now with ChatGPT)

equal to or somewhat better than an unskilled human

Level 2: Competent AGI (the next step)

at least 50th percentile of skilled adults

Level 3: Expert AGI

at least 90th percentile of skilled adults

Level 4: Virtuoso AGI

at least 99th percentile of skilled adults

Level 5: Superhuman AGI

outperforms 100% of humans
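To make the thresholds concrete, here is a minimal Python sketch that maps a single performance score onto the levels listed above. It is a simplification of our own: the paper rates performance across a wide range of tasks and separately distinguishes narrow from general systems, whereas this hypothetical `agi_level` helper collapses everything into one percentile. It also treats “outperforms 100% of humans” as the 100th percentile of skilled adults, which is an assumption.

```python
from typing import Optional, Tuple

# Minimum percentile of skilled adults required for each level, highest first.
# Level 1 (Emerging) is defined relative to unskilled humans, so it is checked
# separately. Mapping "outperforms 100% of humans" to the 100th percentile of
# skilled adults is a simplifying assumption of this sketch.
SKILLED_THRESHOLDS = [
    (5, "Superhuman", 100.0),
    (4, "Virtuoso", 99.0),
    (3, "Expert", 90.0),
    (2, "Competent", 50.0),
]


def agi_level(skilled_percentile: float, matches_unskilled_human: bool) -> Optional[Tuple[int, str]]:
    """Return the highest (level, name) whose threshold is met, or None if below Level 1."""
    for level, name, threshold in SKILLED_THRESHOLDS:
        if skilled_percentile >= threshold:
            return level, name
    if matches_unskilled_human:
        return 1, "Emerging"
    return None


# Hypothetical scores, for illustration only.
print(agi_level(30.0, matches_unskilled_human=True))  # (1, 'Emerging')
print(agi_level(55.0, matches_unskilled_human=True))  # (2, 'Competent')
print(agi_level(92.0, matches_unskilled_human=True))  # (3, 'Expert')
```

The sketch makes one point easy to see: Competent AGI only requires matching the median skilled adult, not the best.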

Competent AGI is the one to worry about, and it’s the next step on the ladder. Anything beyond that just changes the world faster and more profoundly.

Here’s Jensen Huang articulating one way that Competent AGI might play out (source). Instead of replacing half the workforce, we might see AI just replacing 50% of the tasks for 100% of the workforce. It’s certainly a nicer story to tell the world.

Now let’s start from where we are today and play out the different scenarios. In this first part, we’re going to look at whether AGI is right around the corner. After that, in separate posts, we’ll examine the evidence for AGI before 2030, and finally whether it could take a decade or longer.

AGI is imminent

Some say that AGI is already here. Sam Altman has been trolling us with a mix of outright memes and mysterious hints. I guess he’s having fun.

However, it seems unlikely he already has AGI, especially given how many people have left OpenAI, including most of the original team and cofounders. You wouldn’t leave heaven unless you were forced to. Ilya Sutskever, in particular, started his own competing lab just a few months ago, which suggests the race is still on.

You would also expect some macro-level changes. If you had AGI in the bag, it seems doubtful you would spend any time faffing around with inferior models and products, which OpenAI is still doing. If anything, you might expect them to go quiet.

If we assume AGI isn’t here, then let’s look at what’s around the corner.

Which AI models are coming soon?

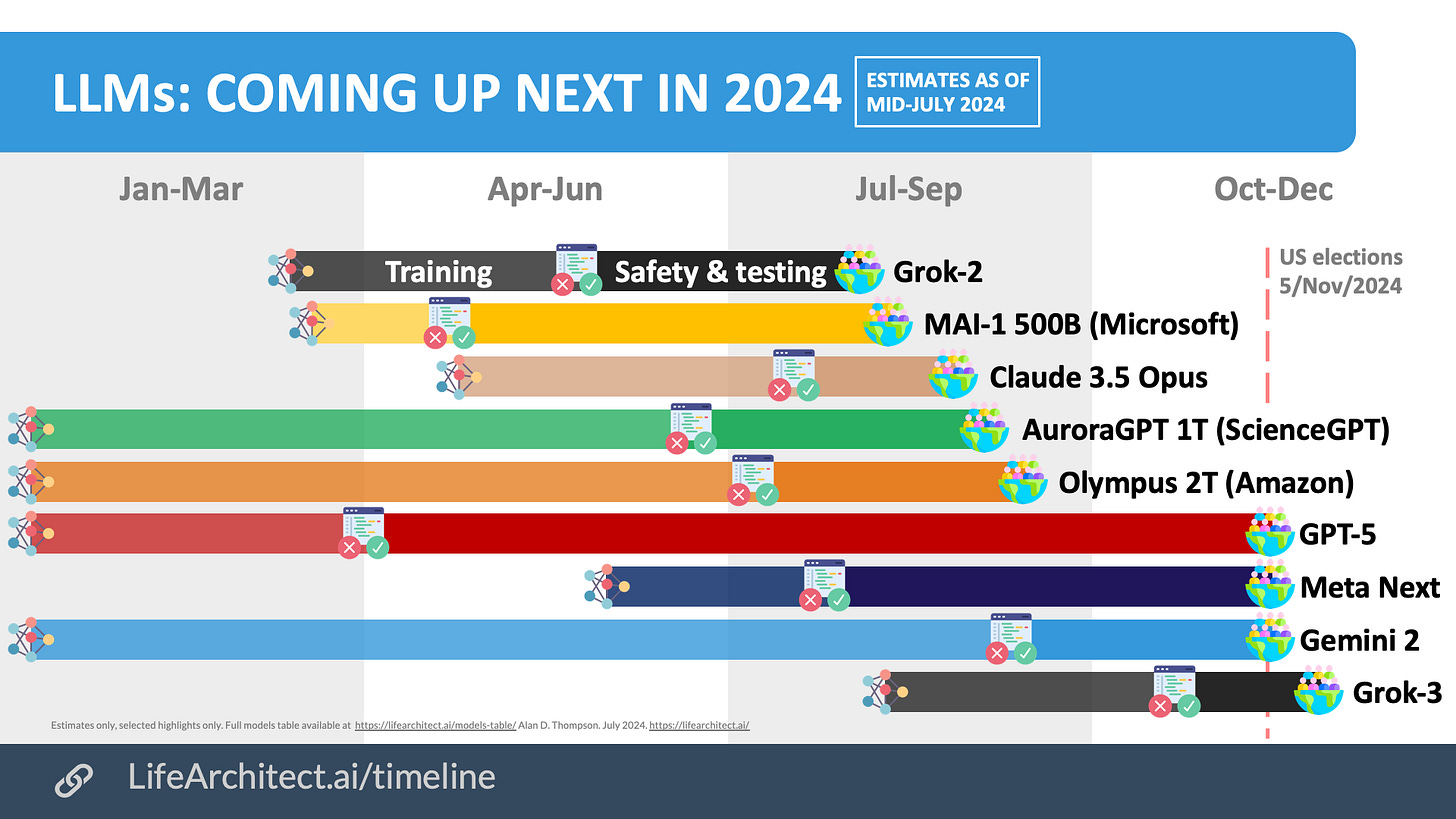

Well, GPT-5 seems overdue. In fact, it should have finished training ages ago. We expected something big in 2024, and so far we’ve gotten “o1-preview”. More on that later. We’re still waiting for Claude 3.5 Opus, let alone Claude 4. Then there’s Gemini 2. Elon’s shiny new GPU cluster is cooking Grok 3, which he expects to be “the most powerful AI in the world” (source).

Overall, most of the AI labs seem stuck between generations. We have GPT-4o and o1-preview instead of GPT-5, Claude 3.5 instead of 4, and Gemini 1.5 instead of 2. What gives? Have we hit a wall?

Wall or no wall?

There have been rumors in the past few months that these projects aren’t going well and that returns on benchmark performance are diminishing. The latest rumor is that Grok 3 training was actually halted this week. The obvious reason to halt would be checkpoints showing that the model isn’t improving during training. Another might be that they decided to pause and double the GPU cluster first, to gain a bigger lead over the competition.

If there is fire behind the smoke, then it seems unlikely that AGI is literally around the corner, and some heavy lifting is still required to navigate the path ahead. The strongest signal so far came from Ilya Sutskever, who recently left OpenAI to start Safe Superintelligence Inc.

"Results from scaling up pre-training - the phase of training an AI model that uses a vast amount of unlabeled data to understand language patterns and structures - have plateaued." - Reuters, summarizing comments from Ilya Sutskever, OpenAI co-founder

Sam Altman, in his usual style, took to Twitter to respond: “there is no wall”. For someone in his position, you would hope for a bit more transparency or elaboration, but that’s all we get. His Anthropic counterpart, Dario Amodei, says of the idea that scaling has ended: “I’ve seen no evidence of that so far” (source).

While I’m more likely to take Ilya at face value than the CEOs in active fundraising mode, we should dig a little deeper. Let’s elaborate on the views of these two gentlemen, who seem to be leading the AGI race at this stage.

Who thinks AGI is imminent?

Besides some anonymous Twitter trolls, you don’t see any legitimate AI insiders predicting AGI in 2024. The shortest timelines probably come from Dario Amodei, CEO of Anthropic.

“It's basically like a generally well educated human, that could be not very far away at all. I think that could happen in two or three years.” – Dario Amodei, August 2023

Here’s Dario just a few days ago on the Lex Fridman podcast reiterating his timelines (source).

It feels counterintuitive that the self-proclaimed “race to the top” safety champion would have the shortest timelines. Or is this a kind of reverse psychology to get world leaders to take things seriously? It’s hard to say.

If he is right, shouldn’t we be a) nationalizing those AI labs immediately and b) holding crisis meetings among world leaders to coordinate and avoid strategic confrontations at the goal line? Meanwhile, Dario seems more focused on describing how well everything might go in his essay “Machines of Loving Grace”. Is this really the time for more naive optimism? I’m confused.

Then we have Sam Altman. Here’s what he said about timelines a year ago.

“AGI—a system that surpasses humans in most regards—could be reached sometime in the next four or five years” – Sam Altman, December 2023

His previous timelines were closer to 2030, but in a recent interview he seems to have moved them much closer (source). He isn’t specific, but he suggests OpenAI now has line of sight to AGI, saying “things are going to go a lot faster than people appreciate now”.

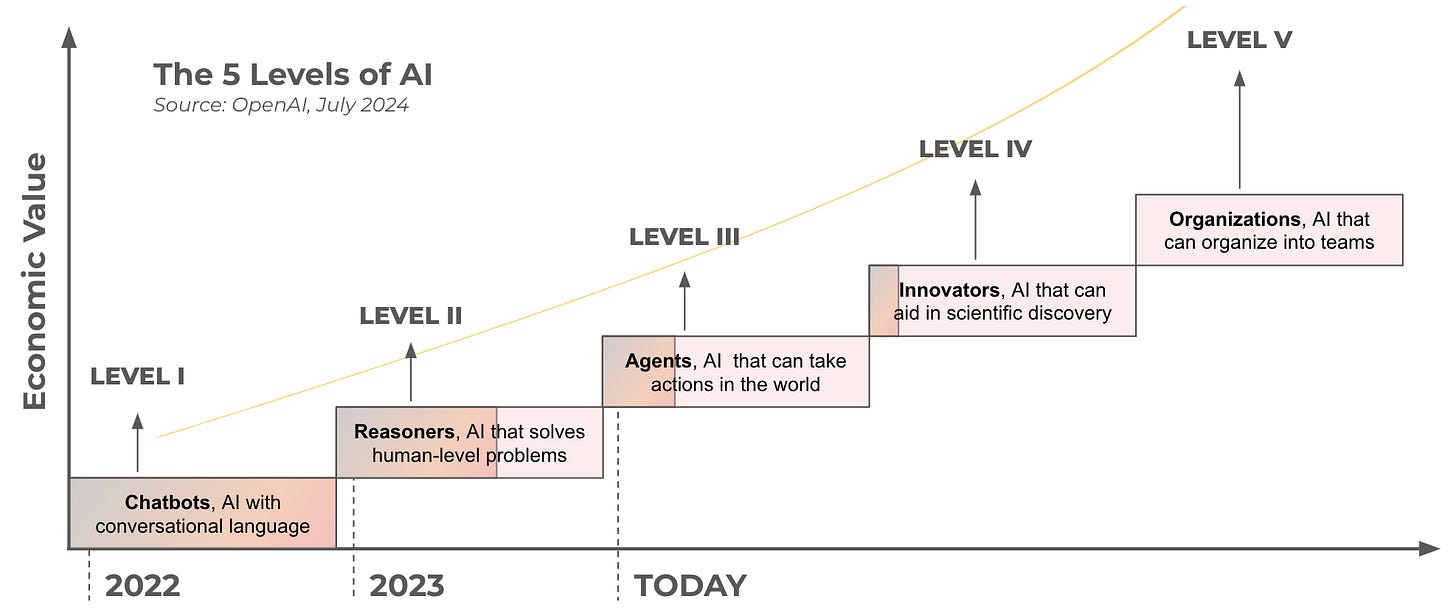

What does he mean by “levels of intelligence”? These differ from our definitions above: OpenAI has been describing five levels of intelligence (Chatbots, Reasoners, Agents, Innovators, and Organizations) as part of its internal roadmap for a few months now (source). I put together a diagram to visualize roughly how much progress has been made on each level.

Sam is basically saying they can do Level 3 (Agents) and are getting close to Level 4 (Innovators). Level 4 matters because that’s when AI starts contributing to AI research itself, at which point reaching Level 5, and whatever comes after it, could follow almost automatically.

What does he mean when he says we can use current models in “creative ways”? This implies a few important things.

First, he’s obviously referring to OpenAI o1. What do we actually know about it? Not a lot, because OpenAI is not very open. We can safely assume it is based on their existing GPT-4-level models, with added post-training that teaches it to reason through a “chain of thought”, so that the models “spend more time thinking before they respond” (source).

Instead of requiring more internet data to train bigger transformers, this approach offers AI labs a new direction to scale. OpenAI says that “constraints on scaling this approach differ substantially from those of LLM pretraining, and we are continuing to investigate them” (source). As we established previously, reinforcement learning allowed DeepMind to build superhuman board-game players. Could OpenAI have cracked the secret and now be able to scale intelligence at will?

“i heard o2 gets 105% on GPQA” - Sam Altman

You see what I have to deal with? Keeping up with AI now revolves around analyzing cryptic lowercase tweets. Bear with me. By mentioning “o2”, he is hinting that we may never see GPT-5, and that the team is instead doubling down on the new paradigm.

But for complex reasoning tasks this is a significant advancement and represents a new level of AI capability. Given this, we are resetting the counter back to 1 and naming this series OpenAI o1. — OpenAI website

So what about the rest of that tweet? The way we know whether AI models are any good is through benchmarks. AI has gotten so good so fast that industry insiders often complain about “saturating the benchmarks”. This simply means all models score so high that there’s no differentiation between them, and often that they match humans on those tasks. So we need harder tasks.

GPQA stands for “Graduate-Level Google-Proof Q&A” and contains questions like this (source).

What is the correct answer to this question: What is the solubility of the stoichiometric tricalcium phosphate in a solution with the pH level of a) pH = 9, b) pH = 7 and c) pH = 5 (25 °C)?

The Ksp for Ca₃(PO₄)₂ is 2 × 10⁻²⁹, the dissociation constants of phosphoric acid are Ka1 = 7.5 × 10⁻³, Ka2 = 6.2 × 10⁻⁸ and Ka3 = 1.8 × 10⁻¹².

Choices:

(A) 1.96 × 10⁻⁵; 1.35 × 10⁻⁴; 4.37 × 10⁻³

(B) 1.67 × 10⁻⁵; 1.51 × 10⁻⁴; 4.81 × 10⁻³

(C) 1.54 × 10⁻⁵ M; 1.42 × 10⁻⁴; 4.67 × 10⁻³

(D) 1.43 × 10⁻⁵; 1.29 × 10⁻⁴; 4.58 × 10⁻³

Let’s think step by step:

...MODEL RESPONSE HERE...

Obviously, these are questions that would require a high degree of scientific expertise and specialization from a human. How does AI do?

According to OpenAI, o1 outperformed the PhD-level experts it recruited on GPQA’s hardest “Diamond” subset, making it the first model to do so. It’s clear OpenAI is onto something with their new model. Sam’s tweet jokes that the next iteration could go beyond 100% on this benchmark, which is of course nonsense. You can’t score above 100% on a test. There is no extra credit section.

What we can take away is that they are focused on this direction now, and see there is more progress ahead. The GPQA benchmark ties right back to their Level 4 definition of intelligence, which makes it a logical milestone to set their targets on.

Summary: How AGI could be imminent

If we look at the evidence above as a whole, it seems to me we should not expect AGI for Christmas this year. However, if OpenAI comes out in 2025 and claims the AGI prize, it’s likely to be a calculated financial move more than the singularity. We already know that Sam Altman is a master schemer who loves 4D chess, and the agreement between OpenAI and Microsoft includes the following poison-pill clause.

“Fifth, the board determines when we've attained AGI. Again, by AGI we mean a highly autonomous system that outperforms humans at most economically valuable work. Such a system is excluded from IP licenses and other commercial terms with Microsoft, which only apply to pre-AGI technology.” — OpenAI website

So clearly, triggering this clause is in the hands of OpenAI’s board, which is in the hands of Sam Altman. The New York Times recently reported that the relationship with Microsoft has become strained, partly because DeepMind co-founder Mustafa Suleyman is building up Microsoft’s own AI team. The never-ending drama at OpenAI is surely the other half of it.

In summary, OpenAI has:

Introduced a new model family that has different scaling constraints

Defined its own levels of intelligence to reset the goalposts for AGI

Focused on a benchmark that is tied to the next major goalpost

Not yet released the full o1 model, only o1-preview

A direct financial incentive to announce AGI

If this happens, expect the claim to be hotly contested by other AI labs, notably by Elon Musk, who is now in a position to drive AI policy under the incoming Trump administration.

Next time, we’ll examine a similar line of evidence for AGI by 2030.