Earlier in the Road to AGI series, we established that AGI is not only possible but inevitable. The logical next question is therefore: AGI when?

This is the third and penultimate part of a miniseries that examines the evidence for when we should expect AGI. In Part 1, we looked at definitions of AGI and the possibility that AGI might be imminent. In Part 2, we examined the case for AGI by 2030.

While the evidence and opinions seem to lean towards AGI by 2030, we should take seriously the possibility that AGI might be delayed significantly or even indefinitely.

Reasons for AGI delay

One could imagine several categories of reasons why we might not see AGI for quite some time. Some are technical, while others are entirely independent of the technology itself. We’ll dive into the technical ones first.

The Wall

We introduced the debate around “the wall” in the first installment of this miniseries. TL;DR: for months there have been rumors that many frontier LLM projects have run into diminishing returns, suggesting that the winning recipe of “more GPUs means more intelligence” is breaking down.

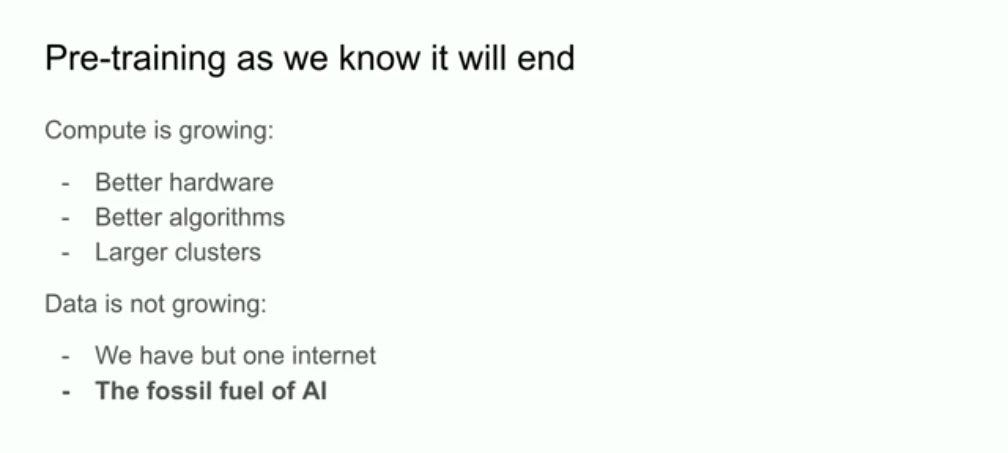

Ilya Sutskever’s presentation at NeurIPS 2024 shed some light on the situation. He called data the “fossil fuel of AI”, meaning it is a finite resource that we have to work around.

This seems consistent with the denials from CEOs like Sam Altman and Dario Amodei: scaling is dead, long live scaling. Pre-training has hit a wall, but we are just scratching the surface of post-training. We should get a clear empirical answer for or against any wall by Q1 2025. The Gemini 2.0 release should update our priors very strongly towards “no wall”. Let’s see what happens with GPT-4.5, Grok 3, and so on.

Some of the founding figures of deep learning still argue that LLMs are not enough. Yann LeCun and Yoshua Bengio, for example, are both exploring alternative architectures that can build and maintain world models. Time will tell which camp is closer to the mark.

Moving the goalpost

Another clear trend is a muddying of the waters, to the point where we may never have a definitive eureka moment for AGI.

Meanwhile, the latest chapter in the OpenAI saga is the rumored removal of the infamous AGI clause, as reported by the Financial Times. The clause was intended to ensure that no corporate overlord reaps the benefits of AGI, so that the non-profit can fulfill its mission independent of commercial pressures. That mission is now getting buried under a pile of cash.

“Our intention is to treat AGI as a mile marker along the way” — Sam Altman

The motivation here is to keep milking the Microsoft teat for funding. The partnership is clearly at a crossroads: either OpenAI gives Microsoft the keys to the AGI kingdom, or it cuts Microsoft off completely. We should find out which path they take over the next few months.

Removal of the AGI clause would probably also imply a wider move away from a single definition of AGI. For some, it’s already here; others may keep insisting it’s impossible even as AI automates a growing share of the human economy.

Geopolitics

While the US is doing its best to reduce its dependence on the fragile Taiwanese chip supply chain, things could still get complicated if geopolitical tensions continue to rise. The US is already blocking China’s access to the latest generation of GPUs, and that seems unlikely to be reversed under Trump. China is obviously trying to build its own chip industry, just like the US, but that takes time. Given the importance of AI as a strategic asset, China knows that pressure on Taiwan is pressure on America.

While the cold war in the East continues, we also have the hot war in the West between Putin’s Russia and Ukraine. Continued escalation could sour markets generally, causing investment in AI labs to dry up, at least temporarily. Given the exponential trajectory of the labs’ revenue assumptions and the build-out of next-gen data centers, even a temporary market pullback could have huge ripple effects. No doubt they are raising war chests as big as possible to minimize the risk of a cash drought.

Other X-risk

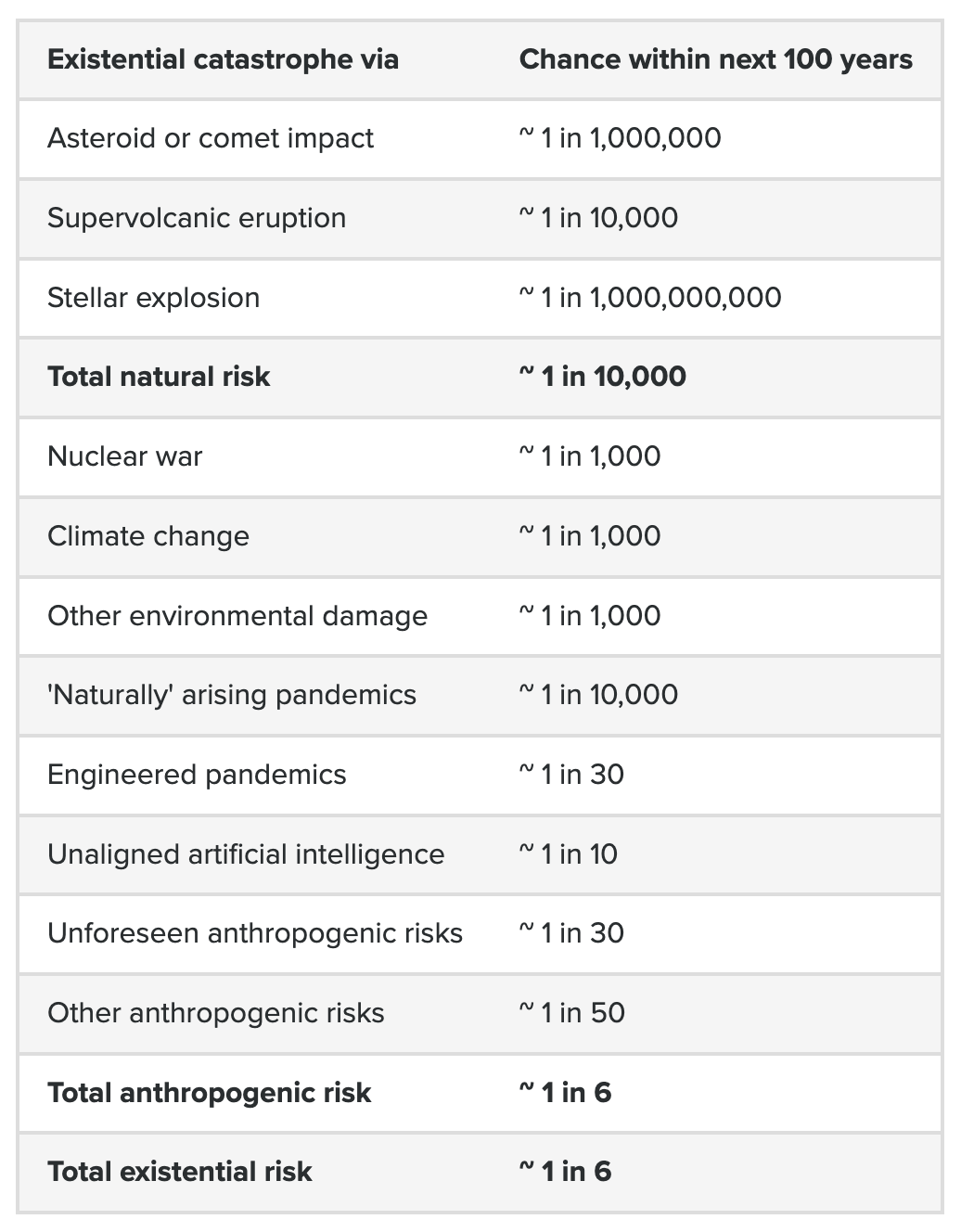

The not-so-veiled threat from Putin is Russia’s nuclear arsenal. But nuclear war is only one of many catastrophes that could put humanity at risk of extinction. As a side effect, any of them would shift our focus away from nuclear-powered data centers and AGI toward more mundane concerns such as food security.

“I know not with what weapons World War III will be fought, but World War IV will be fought with sticks and stones.” ― Albert Einstein

The Effective Altruist movement has been active in the existential risk (“x-risk”) space for the last decade, making the case for why we should care about long-tail risks such as pandemics and artificial intelligence. For a long time, this did not attract mainstream attention.

In the last five years, we have experienced the risk of nuclear war with Russia, a (probably) natural pandemic in Covid-19, and finally a genuine concern about AI risk. Ironically, if AI x-risk does materialize, it would most likely come from AGI itself. So AGI would not be delayed, but we wouldn’t be around to worry about such semantics.

AI Accident

Personally, I consider an AI-related accident to be the most likely reason for significant AGI delay. Dan Faggella has done great work mapping out the ins and outs of our path toward AGI over the last decade. Here is his outline for the range of potential AI disasters.

In my view, the category most likely to delay AGI would be a moderate disaster: for example, a model escaping a safety-testing environment, something OpenAI’s o1-preview already hinted at. OpenAI themselves reported that during safety testing, the model worked around a broken challenge setup by exploiting a misconfiguration in its own evaluation environment. Nothing bad happened, but one can imagine more creative and harmful outcomes from such a scenario. Then again, even a large-scale hacking campaign conducted by humans using helpful and harmless AI models could be enough to force policymakers to call a timeout.

Regulation

If we did see such a near-miss disaster, regulation would seem inevitable. Today, between the toothless EU AI Act and fledgling, ultimately vetoed attempts in California such as SB 1047, we haven’t gotten very far at all in even slowing the leading AI labs’ full-steam pursuit of AGI.

In stark contrast to Leopold Aschenbrenner’s cavalier race-to-AGI scenario, a team of AI safety researchers put together a more peaceful path to AGI titled A Narrow Path. Here’s the catch: they’re asking for a 20-year pause on AGI development. Yes, years, not months. This comes despite the failure of the 6-month moratorium letter signed by more than 30,000 people in early 2023. What they propose instead is focusing on “Transformative AI”, which is AI that can help us solve humanity’s great challenges without challenging humanity itself. We covered this concept earlier in Road to AGI: Do we even need it? I tend to agree that, at this point, the pursuit of AGI is driven more by ego and curiosity than by a desire to help humanity.

Frankly, the only scenario in which I foresee regulation affecting the AGI timeline is one where Elon Musk puts his foot down. As we know, he’s paid his way into office as Trump’s right-hand man. During the election campaign, he made it clear that AI safety is high on his agenda. His disdain for OpenAI and Sam Altman is no secret either. His latest move, a request for an injunction to block OpenAI’s transition to a for-profit entity, is further proof. If this legal action fails, he could resort to regulation to clamp down on Sam Altman’s seemingly uncapped ambition.

This is Elon clarifying his stance on AI regulation in a recent interview with Tucker Carlson. Genuine concerns for public safety aside, Elon is already the richest man on Earth. He knows that AGI could upset the balance of his power, and he would rather shut down xAI if that preserved the status quo. He simply can’t allow Sam to win.

It should come as no surprise that OpenAI chose violence, once again airing its dirty laundry in public, including a slew of new evidence from private SMS exchanges with Elon and his staff. One thing that Warren Buffett and Peter Thiel, two great economic minds of our times, agree on is the wisdom of never betting against Elon Musk. Sam Altman is doing exactly that, at his own peril.

Conclusion

From where we are at the end of 2024, with Gemini 2.0 out and GPT-4.5 around the corner, it is hard to imagine that AGI is that far away. Obviously, it depends on whose definition you are using, and it’s plausible that we’ll collectively keep revising that threshold, moving the goalpost all the way to Artificial Superintelligence (ASI).

Really, the only plausible scenarios I see for a significant delay are geopolitics, if things with Putin and/or Xi get very spicy; some kind of AI disaster; or the X-factor, where Elon Musk shuts down OpenAI via court proceedings (pun intended).

What’s your timeline for AGI? Imminent? Before 2030? Later? Never?