Road to AGI: By 2030?

Part 2: Evidence that we should expect AGI by the end of the decade

Earlier in the Road to AGI series, we established that AGI is not only possible but inevitable. The logical next question is therefore: AGI when?

This is Part 2 of a miniseries that examines the evidence for when we should expect AGI. In Part 1, we looked at definitions of AGI and the possibility that AGI might be imminent.

Now we focus on the five years remaining in this decade, which might prove to be a turning point for human civilization.

As you’ll see, this is by far the most common prediction across many sources: industry leaders, compute estimates, and analyst forecasts. This is the main scenario you should take seriously. Reminder: This is within the next five years of your life!

Compute available by 2030

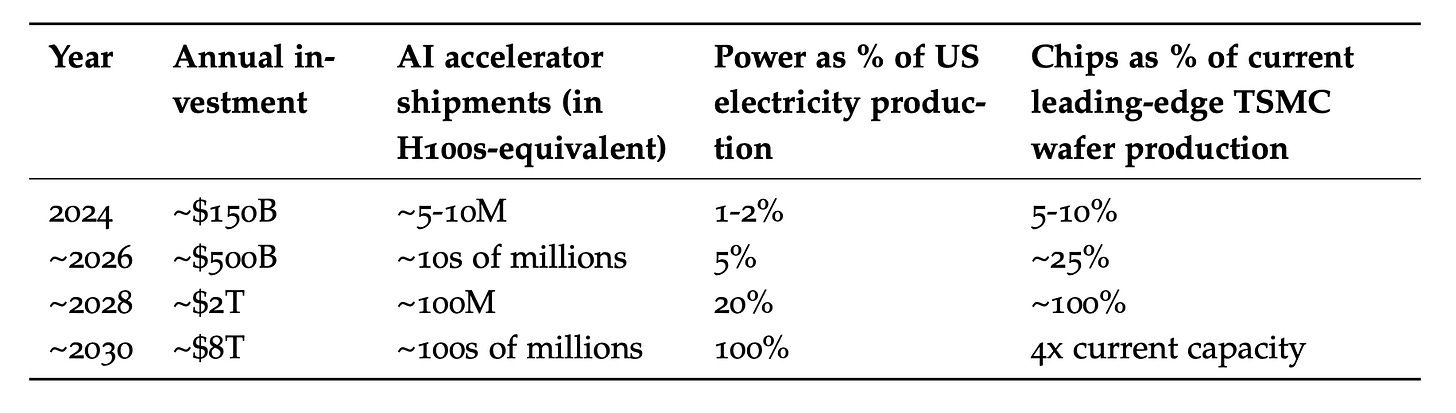

If we start by examining the scale-up in compute, we could make some assumptions about how many GPUs could be produced, how much money could be raised to buy them, and what kind of power would be required to run them.

Leopold Aschenbrenner has done a thorough job of this already, so we can pull in his analysis from Situational Awareness.

So what do we know about these assumptions? Sam Altman recently raised $6.6 billion, and so did Elon, who is already going back for more. Infamously, Sam also tried to raise $7 trillion, presumably with the aim of leapfrogging into 2030. So clearly the money is there, as long as the AI keeps improving and there is no wall or winter to dull investor appetite. We covered that last time, and it seems unlikely that progress will stall, even if detours are required to keep scaling.

What about the other prerequisites: power, chips, and data? Here’s an analysis by Epoch AI, based on an imagined 2030 threshold for compute. This would be the equivalent of the jump in compute we saw from GPT-2 to GPT-4. Granted, this is an arbitrary threshold, but certainly meaningful for our deadline of 2030.

As we already established in the first post in the series, there is enough data. Datasets like Common Crawl and FineWeb cover more or less all of the text on the internet. That comes out at something like 15 trillion tokens (think “word”) that you can train your LLM on. So if GPT-4 has 1.76 trillion parameters, then at a 7:1 ratio of data to parameters it needs roughly 12.3 trillion tokens, which is still less than 100% of the tokens on the internet.
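To make the arithmetic concrete, here is a minimal back-of-the-envelope sketch. It only uses the figures quoted above (15 trillion tokens, 1.76 trillion parameters, a 7:1 ratio), all of which are rough estimates rather than confirmed numbers, and the function name is just for illustration.

```python
# Back-of-the-envelope check of the token budget described above.
# All three figures come from the text and are rough estimates.

AVAILABLE_TOKENS = 15e12     # ~15 trillion tokens of web text
GPT4_PARAMETERS = 1.76e12    # parameter count assumed in the text
TOKENS_PER_PARAMETER = 7     # the 7:1 data-to-parameter ratio from the text


def tokens_needed(parameters: float, tokens_per_parameter: float) -> float:
    """Return the training tokens implied by a data-to-parameter ratio."""
    return parameters * tokens_per_parameter


needed = tokens_needed(GPT4_PARAMETERS, TOKENS_PER_PARAMETER)
share_used = needed / AVAILABLE_TOKENS

print(f"Tokens needed: {needed / 1e12:.2f} trillion")      # 12.32 trillion
print(f"Share of available web text: {share_used:.0%}")    # 82%
```

Under these assumptions the model would use about four fifths of the available text, so there is some headroom left, but not a lot.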

The problem is that we might run into data quality limits first. We already know that the training data for some existing models, including Claude 3.5 Sonnet, contained a significant portion of synthetic data. We also know that models like OpenAI o1 are scaling in a different way that is less reliant on training data, by using Reinforcement Learning in post-training.

What about chips, then?

Here’s Leopold again, this time on where we are with GPUs as a portion of total chip output. There have been efforts to start producing TSMC chips in the US, with some recent success. Then again, Taiwan just made it clear that latest-generation chips will not be made outside Taiwan, so that’s a bummer. Either way, as long as China doesn’t mess with Taiwan, it should be feasible to continue scaling up chips and capacity year by year.

That only leaves power. But as Leopold says, this is solvable. We’ve seen AI labs and their leaders invest in nuclear, including Sam Altman with Oklo, Google with Kairos Power, and Amazon with X-energy. Meanwhile, especially under the Trump administration, we might just go with good old-fashioned oil & gas. In fact, that was also Elon’s solution for xAI.

In summary, from a prerequisites perspective, we’re good to go. Do people think AGI is actually doable by 2030?

Predictions for AGI by 2030

Let’s look at a number of different sources that seem to point to the same time horizon. We’ll look at predictions from prediction markets, researchers working on AI, and some of the leaders of the field.

Prediction markets and AGI

Prediction markets have become all the rage since they called the election outcome before mainstream media did. The current median timeline for AGI on Metaculus is 2033.

What’s noteworthy here is the shift over time. Before ChatGPT was launched in late 2022, the median year was 2058. So the median prediction has come down by 25 years in the last two years. It’s worth considering that those updates may continue.
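As a quick sanity check on the size of that shift, here is a small sketch using only the two median years quoted above. The two-year window is the rough "late 2022 to now" span from the text, not an exact measurement, and the variable names are illustrative.

```python
# Size and pace of the shift in the median AGI forecast quoted above.
MEDIAN_BEFORE_CHATGPT = 2058  # median year before ChatGPT launched (from the text)
MEDIAN_NOW = 2033             # current median year (from the text)
YEARS_ELAPSED = 2             # rough window: late 2022 to the time of writing

shift_in_years = MEDIAN_BEFORE_CHATGPT - MEDIAN_NOW
shift_per_calendar_year = shift_in_years / YEARS_ELAPSED

print(f"Total shift: {shift_in_years} years")                        # 25 years
print(f"Average shift per calendar year: {shift_per_calendar_year}")  # 12.5
```

In other words, the median forecast moved forward by about 12.5 years for every calendar year that passed. A pace like that cannot continue for long, which is why any extrapolation of it should be read with caution.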

Here’s another visualization of the same data by ARK in their latest Big Ideas report. Basically, if you account for the change in forecast over time, it points to an even shorter timeline, with AGI arriving as soon as 2026!

Of course, R&D is hardly ever a straight line to the finish. Some thought it could be a straight line to AGI after AlphaGo became superhuman in 2016. That didn’t happen. The advances in the two years since ChatGPT have been nothing short of incredible, but can we keep scaling the current paradigm all the way to AGI? If there is no wall, then AGI could indeed be imminent.

Then again, these aren’t necessarily experts in the domain. When looking at such trends from the outside, we could easily be extrapolating in either direction: too soon or too late. So what do the actual insiders say?

AI researcher predictions on AGI

If we look at insider sources, here’s a study from January 2024 that surveyed 2,778 AI researchers about the future of AI. There are tons of interesting findings in the report, such as their own definitions for High-Level Machine Intelligence and Full Automation of Labor, but what especially caught my interest is this breakdown by capabilities. Instead of abstract benchmarks like GPQA or MMLU, these are mostly common human tasks we can all relate to, like folding laundry and building LEGO.

While this doesn’t equate to a singular definition, what’s apparent is that for most of these tasks, the 50th percentile is before 2030, and the rest are before 2033.

Okay, so that’s consistent. For the most part, these guys and gals are just cogs in the research machine. Sometimes you can’t see the forest for the trees. What about the leadership that is setting the vision and roadmap?

AI leaders for AGI by 2030

Let’s explore some important figures and their views on AGI timelines. Beyond the domain of science fiction, if you had to pick one person as the originator of how we think about AGI, you might as well pick Ray Kurzweil. While famous for his many predictions about technology, this one might turn out to be the most consequential. This is what he predicted in 1999.

“2029: It is difficult to cite human capabilities of which machines are incapable.” - Ray Kurzweil, 1999

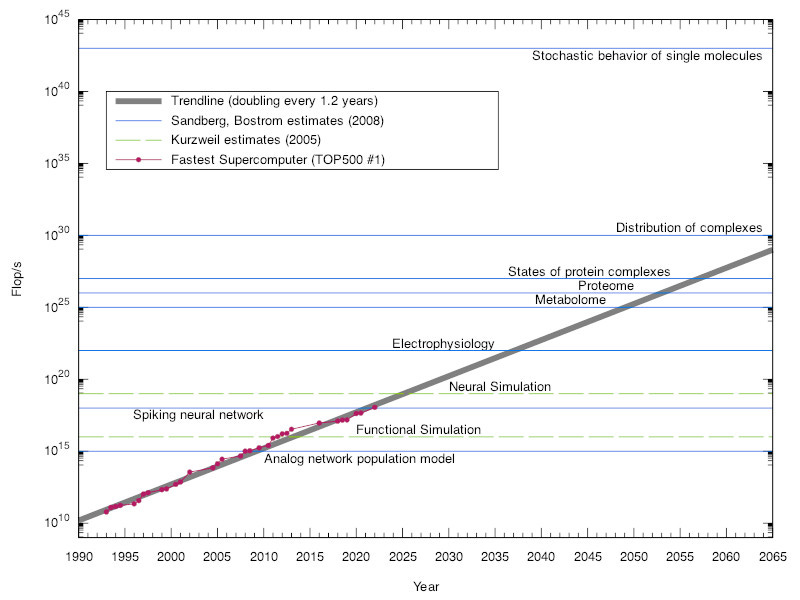

In some sense, Ray’s thesis is very straightforward. He’s just extrapolating from Moore’s Law to guesstimate where we hit the compute threshold of the human brain.

His thesis was further elaborated in 2008 by famous AI thinkers Anders Sandberg and Nick Bostrom. Which of the above thresholds turns out to be the one? That’s an empirical question, and we should find out soon enough.

While Demis Hassabis is now famous for winning the Nobel Prize, his DeepMind co-founder Shane Legg has been thinking about AGI for a long time. In fact, inspired by Kurzweil’s predictions, he titled his doctoral thesis “Machine Super Intelligence”.

“I think there's a 50% chance that we have AGI by 2028” – Shane Legg, October 2023

Nowadays, Legg and Hassabis are part of Google. Here’s a video clip of Eric Schmidt, former CEO of Google, talking about how “a few turns of the crank” are all that’s needed to get to AGI (source). It’s worth noting that he has personally invested in several competing AI labs, including Anthropic, Mistral, World Labs, and Magic, as well as some Chinese AI projects. Talk about hedging your bets!

As we explored last time, the next turn of the crank is expected to turn out models like Claude 3.5 Opus, GPT-5, Gemini 2, and Grok 3. These were all expected around the end of 2024, or early 2025 at the latest.

We end with one of the most interconnected and controversial figures in AI: Elon Musk. It’s well known that he was one of the very first investors in DeepMind alongside long-time collaborator Peter Thiel. After the Google acquisition, Elon felt great anxiety about handing over the keys to AGI to former friend Larry Page. The result was OpenAI. The rest is history, which is now becoming public through the email evidence in Elon’s latest lawsuit.

“Is it smarter than any human next year, or is it two years or three years, but it’s not more than five years, that’s for sure” – Elon Musk, March 2024

Now with xAI, Elon finally has his own horse in the race.

Summary: AGI seems probable by 2030

If you put all of this together, the evidence seems quite strong. Consistently, from a variety of sources, we are seeing dates before or around 2030. Whether you extrapolate scaling up compute, examine trends in prediction markets, or listen to leading voices of the field, it’s all pointing to this time horizon. Remember that two of the main players in this game, Sam Altman and Dario Amodei, are predicting even earlier dates.

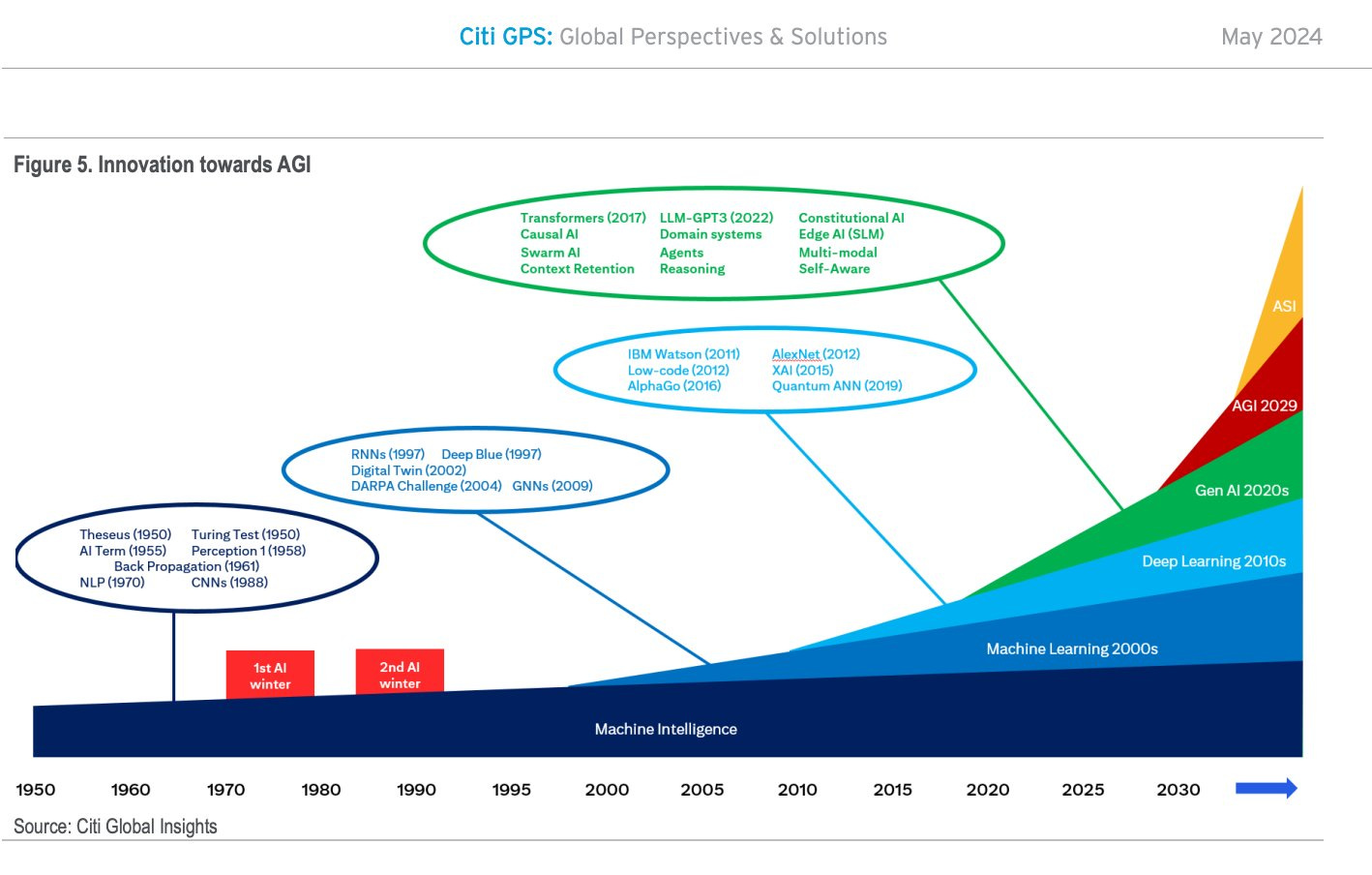

In case you feel these people are all just delusional and part of a Silicon Valley cult, here’s a report from Citibank in May predicting AGI by 2029.

In the past two years, AGI has moved from the realm of science fiction into serious academic study and commercial execution. Nvidia’s surging stock price is one such leading indicator. As we inch closer and closer to the threshold of a superior machine intelligence month by month, I expect AGI to become a regular discussion in board rooms across the globe, and eventually government cabinets. That’s another story for another time.

Yet there are those who disagree. Who isn’t predicting AGI by 2030, and why? Well, let’s look at that next time in the final installment of this timelines miniseries.