The philosophical debate at the core of OpenAI, our path to AGI, and humanity's future

How firing Sam Altman is just the latest chapter in a struggle for control of humanity's future

The biggest headline in AI history is now the firing and rehiring of OpenAI CEO Sam Altman, all in the span of five days in November 2023.

Interestingly, this story begins with the founding of OpenAI itself. Elon Musk recently described on the Lex Fridman podcast how a fundamental disagreement about AGI ended his friendship with Larry Page. Larry wanted to accelerate AGI at any cost with DeepMind, while Elon wanted to do it responsibly. Shockingly, Elon claims that Larry was willing to concede the future of humanity itself to AGI, as the next step of evolution from biological to silicon-based intelligence. The outcome: Elon co-founded OpenAI to focus on the safe development of AGI.

At its core, this philosophical debate, now escalating into potentially open economic warfare, is about how we should approach the coming of Artificial General Intelligence (AGI). The two sides are represented by niche philosophical views held by many Silicon Valley insiders: Effective Altruism (“EA”) and Effective Accelerationism (“e/acc”). Sam Altman wasn’t fired on this basis outright; the reasons are reported to be more about a focus on commercialization versus pure research. But the underlying themes match the fundamental tradeoff between speed and safety.

I set out to write a simple article laying out these two views, but it turned into a piece of investigative journalism. It’s all intertwined and rather spicy.

Let’s begin, chronologically, with EA.

The Effective Altruists

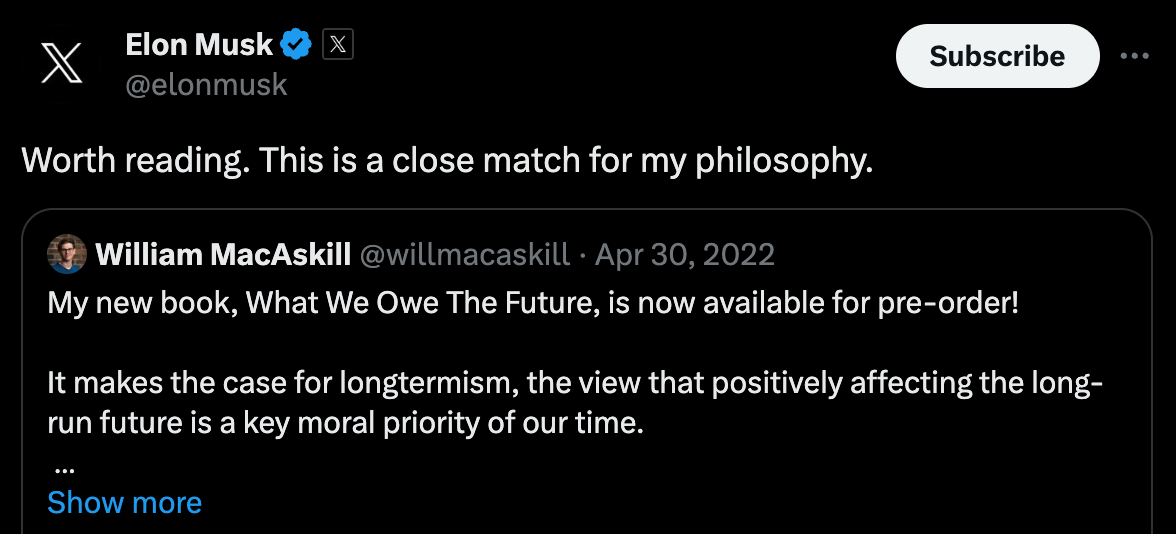

If you’ve heard of Effective Altruism (EA), it’s probably in connection with another famous Sam, the now infamous Mr. Bankman-Fried. SBF loved him some EA and was a prolific funder for many leading EA organizations. Before all that, let’s go to the beginning. What exactly is EA and where did it come from? Let the man himself, Will MacAskill, tell you all about it.

One key assumption central to the EA community is that we may have a great future ahead of us, and that we must place great moral value on future generations whose very existence may depend on the decisions we make today. It’s a type of longtermist utilitarianism. In this context, AI could disrupt or even cut off humanity’s potential grand futures. It’s no wonder, then, that AI safety and AGI have become major research and grant-making initiatives within the leading EA organizations.

Following his rise to fame and some fortune, MacAskill bought into the SBF story hook, line, and sinker. They even set up the now-defunct FTX Future Fund to usher in an age of abundance for all of humanity. That was poor judgment, as it turns out. Read the juicy details in this Time article, if you’re interested. Despite the embarrassment of being an SBF acolyte, he’s still very much at the helm of many EA initiatives alongside his academic work at Oxford.

Elon Musk

SBF wasn’t the only tech prodigy to associate himself with EA and MacAskill. Here’s another guy you might have heard of.

Elon has a complicated relationship with OpenAI, to say the least. He funded an initial $40 million in grants for the then-nonprofit research lab, taking no equity, and came up with the name. According to this report, he tried to run it himself and, having failed to win support from the leadership, bailed. The OpenAI website states: “Elon Musk will depart the OpenAI Board but will continue to donate and advise the organization. As Tesla continues to become more focused on AI, this will eliminate a potential future conflict for Elon”. Yeah, maybe.

No need to tell you anything further about Musk at this point, but as you’ll see, he does pop up here and there in the narrative, and he has clearly been following the story closely over the past few days. While he isn’t officially aligned with any EA cause or organization, Elon is clearly well-aligned with its approach and values.

The OpenAI Board

Now, for the main event. Who are the people who have caused such great chaos in the world of AI? In this article from a week ago, OpenAI is quoted as saying “None of our board members are effective altruists”. Hold that thought.

Ilya Sutskever

In a recent interview with Lex Fridman, Elon Musk shared an anecdote about the hardest recruiting battle he’s ever fought. It was against DeepMind’s Demis Hassabis, and the target was Ilya Sutskever. Elon went on to credit Ilya with hiring much of the early team at OpenAI. In some respects, when it comes to the science of AI, Ilya is OpenAI. What he says carries weight among the people who know AI. Clearly, he has also been central to the board-driven chaos we’ve just witnessed, though he eventually sided with Altman.

A few months ago, Ilya tweeted this cryptic message, which was promptly seconded by Elon Musk. I wonder what conversations took place before this sequence.

In the context of OpenAI, if there is a spectrum running from commercial to safety, Ilya represents the safety end of it. The very end. Well, maybe not Eliezer Yudkowsky levels of concern about AI risk, but clearly very concerned. His PhD supervisor was none other than Geoff Hinton, who recently left Google so he could speak freely to the media and industry about AGI and AI risk. At OpenAI, Ilya has recently been focusing on a project called Superalignment, to which OpenAI has committed 20% of the compute it has secured to date, to bootstrap and accelerate not “capabilities” but safety research.

The reasons for his concerns are not academic, but practical, as he is one of many researchers who have revised their AGI timelines from decades to mere years in the future. Crowdsourced estimates started closer to 2100 just two years ago, but are now down to 2032, just over 8 years away. It’s like an asteroid is heading for Earth, and people are refusing to look up.

Anticipating the arrival of this all-powerful technology, Sutskever began to behave like a spiritual leader, three employees who worked with him told us. His constant, enthusiastic refrain was “feel the AGI,” a reference to the idea that the company was on the cusp of its ultimate goal. At OpenAI’s 2022 holiday party, held at the California Academy of Sciences, Sutskever led employees in a chant: “Feel the AGI! Feel the AGI!” - The Atlantic

If that wasn’t enough to solidify himself as the high priest of AGI, then…

For a leadership offsite this year, according to two people familiar with the event, Sutskever commissioned a wooden effigy from a local artist that was intended to represent an “unaligned” AI—that is, one that does not meet a human’s objectives. He set it on fire to symbolize OpenAI’s commitment to its founding principles. - The Atlantic

We can summarize: Ilya cares about AGI safety first, and not much else. He’s never officially declared for the EA cause, possibly because EA cares about causes other than AGI and Ilya doesn’t. Even so, his perspective on AI risk is very much aligned with the EA consensus.

From what we’ve come to know about the board drama and Ilya’s role as the pivotal vote, my take is that Ilya wants to focus on AGI and only AGI. Something like ChatGPT, while helpful for the company and its growing need for resources, isn’t directly AGI-related. In some ways, the more popular OpenAI products and services are, the more resources will go toward rather mundane needs, such as scaling infrastructure and working with enterprise customers. It’s reasonable to assume that, from Ilya’s perspective, these are dangerous side quests that waste precious time and resources that should be spent on the main storyline: AGI.

Tasha McCauley

As if this story couldn’t be more random and bizarre, one of the lesser-known independent members of the OpenAI board is tech entrepreneur Tasha McCauley. If you have heard of her before, it’s more likely due to her celebrity husband, Joseph Gordon-Levitt. In fact, she’s a diehard EA: she currently serves on the board of the Centre for Effective Altruism, is a trustee of 80,000 Hours (great podcast), and is a board member of Effective Ventures, which owns the aforementioned organizations. Former CEA board members include Sam Bankman-Fried.

Helen Toner

That brings us to Helen Toner, very much at the center of recent events. Who is she, and how did she end up on the board, you might ask? Not a famous Silicon Valley founder, not married to a celebrity. Instead, she works on AI policy in Washington at CSET. Here she is some 9 years ago talking about Effective Altruism on TED.

More relevant to the narrative, she recently co-authored a paper titled “Decoding Intentions: Artificial Intelligence and Costly Signals”, which is reported to have upset Sam Altman. His ire comes from the paper’s criticism of OpenAI relative to rival Anthropic. Where OpenAI is portrayed as cavalier on safety, with its launch of ChatGPT spurring “frantic corner-cutting”, Anthropic is applauded for delaying the launch of Claude, a competing chatbot. Fair assessment, or a nice sob story to justify being outcompeted by OpenAI? It’s obvious what Sam thinks.

“A different approach to signaling in the private sector comes from Anthropic, one of OpenAI’s primary competitors. Anthropic’s desire to be perceived as a company that values safety shines through across its communications, beginning from its tagline: ‘an AI safety and research company’.” - Helen Toner & CSET

Helen arrived on the board of OpenAI to replace Holden Karnofsky of OpenPhil, one of the leaders of the early EA movement. Helen worked for Holden at OpenPhil for a few years. OpenPhil, started and funded by Facebook co-founder Dustin Moskovitz for EA-style grantmaking at scale, granted $30 million to OpenAI in 2017 and took a board seat to ensure proper governance. Cough. Holden also wrote a 12-part blog series modestly titled “The Most Important Century”, which outlines a framework that is quite relevant to the topic at hand.

Holden left the position in 2021, as his wife happens to be the co-founder of OpenAI’s safety-oriented competitor Anthropic. In the relationship disclosures section of the grant, it actually says “OpenAI researchers Dario Amodei and Paul Christiano are both technical advisors to Open Philanthropy and live in the same house as Holden. In addition, Holden is engaged to Dario’s sister Daniela”. You can’t make this stuff up.

“Given our shared values, different starting assumptions and biases, and mutual uncertainty, we expect partnering with OpenAI to provide a strong opportunity for productive discussion about the best paths to reducing AI risk.” - OpenPhil

While not necessarily reported as such, it seems quite clear that Helen’s seat continues OpenPhil’s role on the board. It’s also noteworthy that OpenPhil has supported Helen’s current project at CSET.

Anthropic, a safer OpenAI?

One interesting branch of this whole narrative is Anthropic, arguably the #3 AI lab in the world, right after OpenAI and DeepMind. I already noted its historical relationship to OpenAI’s board, but the story runs deeper. This 2020 exposé of OpenAI’s early team highlights the change in culture that the Microsoft investment and the conversion to a for-profit structure caused among the believers. One such believer was head of research Dario Amodei, the aforementioned bunkmate of EA mastermind Holden Karnofsky. Dario has been very much a part of the EA community since the early days.

With a small but merry band of other true EA believers, he founded a direct competitor to OpenAI that was going to be more safety-focused. Guess non-competes aren’t a thing in AI? Notable investors included Jaan Tallinn, of Skype fame and an EA believer, and, wait for it… one Dustin Moskovitz, whom we already mentioned as having influence on OpenAI’s board through an EA-driven OpenPhil grant. Oh, and former Google CEO Eric Schmidt. Fun fact: he also recently invested in Mistral, the French challenger. Hmm… Eric must really want AGI, or a seat at the table.

Anthropic was quick to establish itself by defining an approach it calls Constitutional AI, which seeks to mitigate well-known alignment issues in current AI, such as hallucination and bias. The Atlantic also reports that ChatGPT was a panicked, direct response to Anthropic’s plans to launch its own chatbot, Claude. OpenAI beat Anthropic to market by 4 months, and those decisions continue to have repercussions.

Given the hit to OpenAI’s unprecedented trajectory, one winner seems to be Anthropic. Conveniently, its recent investors include Microsoft’s direct competitors Amazon and Google. In terms of governance, there are both differences and similarities. Just recently, Anthropic announced it was developing its own governance structure, which seems no less complex than OpenAI’s infamous spider web. The Anthropic Long-Term Benefit Trust is an “independent body comprising five Trustees with backgrounds and expertise in AI safety, national security, public policy, and social enterprise”. What isn’t mentioned: at least three of the five trustees are directly associated with EA institutions.

So, if these are the people trying to steer us toward a safe future with AGI, what does the opposition look like?

The Effective Accelerationists

This is where the story took a weird and unexpected turn for me. I was well aware of the EA movement and its broad positions on AGI, but I had never heard of Effective Accelerationism (“e/acc”), which started popping up in tweets after Sam’s firing was announced.

Given how recent and meme-driven this offshoot of EA is, I think it’s fair to say we can’t claim that any one person follows e/acc’s specific tenets as they are currently defined. Instead, we should stick to broader definitions of what it means to diverge from the risk-focused, longtermist approach of the EA community and the OpenAI board.

David Deutsch, a prominent physicist and philosopher, shared his perspective on both EA and e/acc on a recent podcast. Paraphrasing, the main assumptions of accelerationists are:

Biological life is a method for seeking free energy and increasing entropy, according to the laws of thermodynamics.

Capitalism allows higher-level organisms to seek and optimize resources.

Consciousness is substrate-independent and emergent from intelligence.

AGI will therefore maximize consciousness in the universe.

We should accelerate AGI at any cost.

Rather unexpectedly, Deutsch was very critical of EA’s definitions of things like problems and solutions, and of what can be known. He essentially rejects the whole premise of picking and ranking concerns, and even of donating your disposable income. Instead, Deutsch seems broadly pro-acceleration, going so far as to say the five assumptions above are quite aligned with his view of physics. If you want to hear it from the horse’s mouth, and can put up with non-stop tech bro jargon for two hours straight, you can listen to this interview with the pseudonymous founders of the e/acc movement, Bayeslord and Beff Jezos.

These people refer to EAs as “decels”, short for decelerationists, the opposite of what they want. People shouting for safety-first approaches, such as Max Tegmark, Eliezer Yudkowsky, and Geoff Hinton (often called the godfather of modern AI), are all labeled “doomers” to make their concerns sound frivolous. In the broader context, if EAs are longtermists trying to maximize a wide range of values for human flourishing, then the e/acc crowd is more concerned with the total utility function of consciousness, whether biological in nature or not.

Remember where we started this whole story, with Elon and Larry? Well, by this definition, Larry is 100% pro-acceleration. Marc Andreessen recently published a very techno-optimistic manifesto about how we should think of a future where AI is beneficial and all is well with the world. Known to be against any form of regulation, he also literally has e/acc written in his Twitter bio.

As for other card-carrying e/acc believers, just look for Twitter names with “e/acc” after them. Garry Tan (President and CEO of Y Combinator) stands out among a seemingly endless list of hundreds, if not thousands, of Bay Area founders, engineers, and investors. I even see people on LinkedIn adding this mysterious label to their profiles. It’s a real thing, and it’s catching on.

The OpenAI Board

Back to our line of suspects. Who is left that hasn’t declared for the EA cause, and what can we tell about their stance on acceleration?

Adam D’Angelo

Adam seems to be more of a side character in this situation. He’s an established Silicon Valley veteran and CEO of Quora. I haven’t seen much direct commentary from him on AI safety or AGI, so I’d assume Adam is more neutral than Ilya, Tasha, and Helen. Even if he isn’t openly in favor of the e/acc movement, his commercial focus certainly places him more on the speed-first end of the spectrum, alongside Sam and Greg.

One somewhat random theory that came out amid all the speculation was that Adam was blindsided by the GPT Store announced at OpenAI’s recent DevDay, since he had just announced something similar on Quora’s own Poe AI platform.

Given that Adam is the only board member to have survived this reshuffle, I would feel safe squashing those rumors. Poe also offers ChatGPT and GPT-4, so to me Quora seems more of a partner and customer than a competitor to OpenAI.

Sam Altman and Greg Brockman

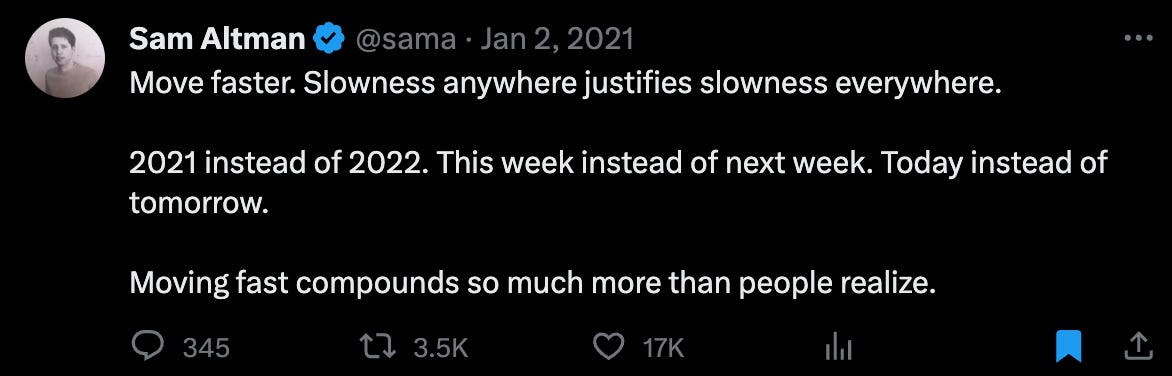

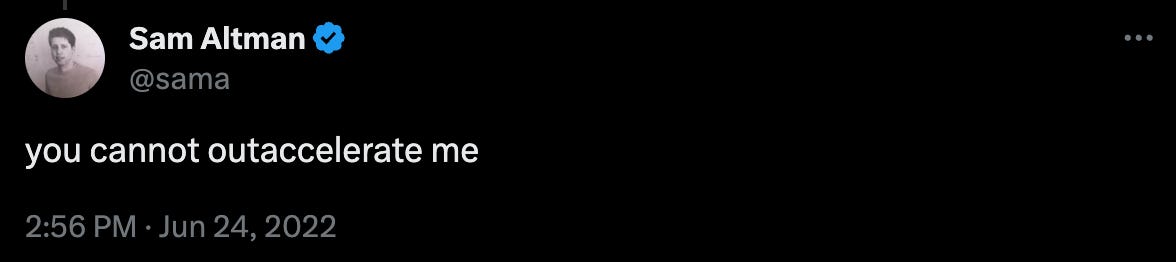

So where do OpenAI’s leading duo land on these issues? Well, the best we can do is look at some of their public comments. It’s not fair to make specific claims about either gentleman, but I believe it’s quite safe to say that neither has shown direct affinity or alignment with the EA community.

Here is a series of three tweets from Sam Altman exploring these topics.

Overall, Sam presents a very balanced view: AGI is both necessary and imperative, but we should be conscious of the risks and seek good outcomes. Then again, he also tweeted this response to one of the e/acc founders.

My conclusion is that Sam leans toward acceleration, and Greg is his ride-or-die. They will accelerate, unless prevented from doing so. This is what happened last weekend.

This isn’t the end

So, we finally know how the story ends. It’s like waking up from a weird dream and everything is back to normal in a flash.

Sadly, the drama is only just starting, as these two camps diverge more openly, pun intended. Consider the new board. Larry Summers is a former Treasury Secretary and was president of Harvard when Mark Zuckerberg started Facebook. He recently made some high-level comments on the potential impact and risk of AI on Bloomberg Talks. Bret Taylor, the new chairman of OpenAI’s board, is a real Silicon Valley legend, having done big things at Google, Facebook, and Salesforce, and most recently chaired Twitter’s board prior to Elon’s takeover. Interestingly, it was reported that he only recently raised $20M for his own stealth AI startup. I can’t find anything on his specific views on AI, which makes me think he isn’t too vocal on AI safety specifically.

OpenAI’s board may have purged its EA contingent for now and tilted toward acceleration and commercialization, but core EA values are still held by many OpenAI leaders and employees, and quite broadly across the entire AI landscape, from labs to startups and policymakers.

Where to from here? Well, Ben Goertzel, an AI researcher and entrepreneur whom I would safely place in the accelerationist camp as a transhumanist, kind of nailed it: capital will automatically flow to the “accelerators” unless regulators stop them.

The likes of Sam and Greg will continue to use their dealmaking prowess and leverage as the top dogs in AI to make bigger and bigger deals. Before this chaos started, they were rumored to be pitching Saudi oil money to build AI hardware to challenge the current monopoly of GPU specialist Nvidia. I have no doubt that, with the revamped board, only bigger and faster things await OpenAI. The foot will be firmly back on the gas pedal, probably with a vengeance.

Yet, this is why it took an Elon Musk to start OpenAI in the first place. There was zero incentive to make any money with the original non-profit model, and Elon wasn’t expecting a return of any kind. The goal was strictly to research and navigate a safe path toward AGI. In some sense, we’re back to square one now, left with more questions than answers.

Unresolved Questions

Effective Altruism - The EA community has taken this one on the chin, no doubt about that. Yet many diehards, including Anthropic CEO Dario Amodei, will look at this OpenAI debacle with grave concern. This could be a trigger for the EA AI community to double down and concentrate its efforts on ensuring the survival of the species.

Governance - Whatever OpenAI’s model was intended to achieve, it failed either way and is likely to be rewritten shortly. Does Anthropic have the answer? There are zero standards, so for now this is a free-for-all until regulation or independent oversight is in place.

Regulation - Will we see an EU AI Act that excludes the leading AI labs from its scope, effectively cementing the incumbents through total regulatory capture? What impact will the upcoming 2024 US presidential election have on President Biden’s executive order on AI?

Microsoft - Satya very nearly pulled off the greatest acquihire in Silicon Valley history. For a moment, it seemed that Sam, Greg, and 580 OpenAI employees were on their way to Microsoft. Now that this scenario hasn’t materialized, will Microsoft double down on its golden goose, or seek to diversify? Satya speaks very eloquently about AI risk, yet reportedly plans to spend a cool $50 billion on building out bigger and bigger datacenters to fuel the path to AGI. That certainly sounds like accelerationist behavior to me.

Google - I won’t even mention Apple, which seems to be sleeping on the job with GenAI. Remember the days when DeepMind was the #1 AI lab in the world, and Demis Hassabis was the poster boy of AGI? Well, their reinforcement learning approach worked wonders for narrow AI, but has been completely usurped by the Transformer architecture, which was invented at Google. Demis has been active, launching a subpar LLM in Bard, but he’s taking an awfully long time to release the secretive Gemini model, which is rumored to combine Transformers and reinforcement learning for a best-of-both-worlds approach. Surely, before Christmas?

Elon Musk - Twitter has been a big distraction, but this situation has clearly piqued his interest in all things AGI and OpenAI once again. Yes, xAI is up and running and has launched Grok, but nobody would consider it a serious contender to OpenAI’s dominance. That could change faster than you can fire and reinstate Sam Altman, if Elon decides this is more important than free speech.

What are your takeaways or open questions from all this mayhem?